Hey, this is Jason, and welcome to The Output. We have conversations with founders, operators, and marketers who have driven real growth – and aren’t afraid to share what it took to get there. We dig into the messy middle: the hard decisions, half-baked bets, and lessons you only learn by doing.

AI chat has reshaped customer journeys for every product. This guide is part of our series on how to optimize your presence in AI answers, also known as generative engine optimization (GEO).

How do LLMs work?

Underneath all Gen-AI apps is a large language model (LLM) that powers responses. These models generate answers by:

- Predicting the next word: LLMs like ChatGPT predict the most likely next word, based on patterns learned from analyzing billions of words.

- Training on web data: The models are trained on vast text from across the web, capturing knowledge, language patterns, and context, but this information is static and can become outdated.

- Retrieving fresh information with RAG: Retrieval-Augmented Generation (RAG) helps models access current, relevant information. Before the model generates an answer, the app searches external sources and hands the retrieved content to the LLM, which makes responses more likely to be accurate and up to date.
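
To make the RAG step concrete, here is a toy sketch in Python. It is not how any particular AI product works: the corpus, the "Acme CRM" product, and the word-overlap scoring are all assumptions for illustration, and real systems use search indexes or embeddings instead. The point is the order of operations: retrieve first, then build the prompt the model answers from.

```python
import re

# Hypothetical mini corpus standing in for an external index of web pages.
DOCUMENTS = [
    "Acme CRM added a HubSpot import tool in its latest release.",
    "Acme CRM pricing starts with a free tier for small teams.",
    "A recipe for sourdough bread with a long, cold fermentation.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share the most words with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    # Drop documents with no overlap, then rank by overlap (highest first).
    scored = [(score, doc) for score, doc in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_prompt(question: str, documents: list[str]) -> str:
    """Assemble the text an LLM would receive: retrieved sources plus the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return f"Answer using only these sources:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("What does Acme CRM pricing look like?", DOCUMENTS))
```

Notice that the model can only draw on pages that make it through retrieval. That is why the rest of this guide focuses on making your content easy to find and extract.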

Customer journeys are changing

Instead of scanning 10 blue links, users now type a full question into ChatGPT, Perplexity, or Google and get a summarized response. Google calls this AI Overviews. ChatGPT calls it a response. Either way, it’s clear: generative answers are starting to replace traditional search behavior.

Here are the implications for marketers:

- Traffic is shifting to AI: 5–15% of site traffic now comes from AI answers, not Google links. Click-through rates from AI chat answers are 90% lower than from search results. AI Overviews in search reduce clicks to traditional results by 20–40%.

- You’re also writing for AI agents: Content needs to be current, clear, and optimized for retrieval.

- The foundations still matter: Know the customer. Solve problems. Create real value.

- This channel moves fast: Treat content like a product—rewrite, update, and test constantly.

Why create content for AI agents?

Generative Engine Optimization (GEO) is the practice of shaping how your product shows up in AI-generated answers.

- SEO makes it easier for search engines to find and surface your content.

- GEO makes content easier for AI agents to discover and interpret, increasing the likelihood they’ll use it in results.

There are 3 goals with GEO:

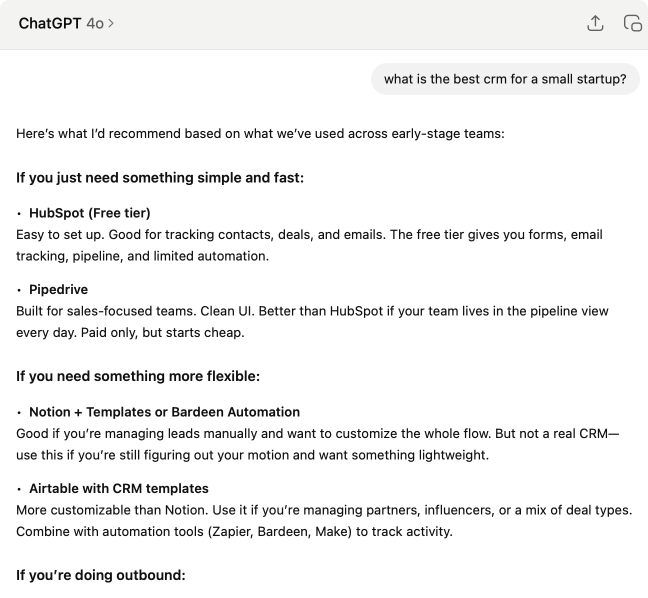

1. Get Mentioned

This is the most basic GEO outcome: when someone asks about your category, your product gets mentioned.

AI discoverability is driven by semantic relevance and sentiment, not keyword matching. LLMs infer relationships between entities based on co-occurrence, context, and clarity. If your product isn’t explicitly paired with the right problems and use cases, it is unlikely to appear.

- On-page supports: content consistency, heading structure, natural language phrasing

- Off-page supports: forum mentions, listicle inclusions, product roundups, reviews

- Owner: Content team × SEO × Product Marketing

2. Be Cited as a Trusted Source

Being cited means AI-generated results don’t just mention your product — they include an external link to your content.

“SEO chased clicks; GEO chases mentions. We’re on loose footing—what works today might break next year. As it stands, the internet model is unsustainable: AI can’t just ‘steal’ content, send no traffic, and expect publishers to keep writing. I bet—and folks like Ben Thompson agree—that AI agents will carry wallets and pay tiny fees each time they cite your work.”

Citation slots are limited and competitive. They’re often assigned to content that’s seen as clear, well-structured, and authoritative. Unlike search rankings, citation selection is opaque and context-dependent. But we do know it favors confidence, factuality, and specificity.

- On-page supports: extractable content structure, schema.org types (FAQPage, HowTo, Article)

- Off-page supports: trusted mentions (e.g., GitHub issues, Reddit threads, community docs)

- Owner: SEO × Tech Writing × Community/Growth
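
If you haven't worked with schema.org markup before, here is a minimal sketch of generating FAQPage structured data as JSON-LD with Python. The `faq_jsonld` and `script_tag` helpers and the sample question are hypothetical. The `@type` values and property names (`mainEntity`, `acceptedAnswer`, `text`) come from the schema.org vocabulary. Whether a given AI product reads this markup is not something we can confirm, so treat it as one input among many.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script tag that goes in the page's HTML."""
    # Escape "</" so text inside the JSON cannot close the script tag early.
    safe = jsonld.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{safe}\n</script>'


if __name__ == "__main__":
    # Hypothetical product and answer, for illustration only.
    faq = faq_jsonld([("Does Acme CRM integrate with GitHub?", "Yes, via a native app.")])
    print(script_tag(faq))
```

Keep the answers in the markup identical to the visible text on the page, so people and agents see the same claims.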

3. Sentiment

Inaccurate or harmful information showing up in AI results is worse than not getting mentioned at all.

AI product mentions are shaped by what the model has seen across the web: how your product is described on your site, how it’s positioned in reviews, what users say about it in forums, and how others compare it to competitors.

That makes citation quality especially important. Your goal is to provide the most complete, up-to-date information about your product, so that agents are more likely to reference it. If another trusted source links to you but uses outdated or vague language, that framing may show up in the answer. The LLM’s existing training data (the web pages, docs, and manuals it analyzed before it ever saw your prompt) also plays a role. But for most early-stage or niche tools, citations have a greater influence than training data.

- On-page supports: unified voice, consistent descriptions, entity association, frequent refreshes

- Off-page supports: trusted reviews, Reddit/Quora threads, social posts, listicles

- Owner: Product Marketing × Brand × Community

Where to start with GEO

With any new fundamental shift in tech, it’s exceptionally risky and lucrative to move quickly. Like Yelp with search, Zynga with social, or Honey with browser extensions—you get disproportionate upside by betting early and building around the new interface.

| Phase | Focus | What to do | Owner |
| --- | --- | --- | --- |
| Crawl | Understand how you're showing up | Monitor prompts to see how your product/category appears across AI tools; audit your site's visibility and crawlability; identify cited URLs (if any); set up LLM referral tracking | SEO, Marketing Ops, Growth |
| Walk | Improve what you control | Publish content targeting prompts; refresh and restructure old content; unify product messaging across surfaces; fix on-page blockers (e.g., schema, JS, structure) | Content, SEO, Product Marketing |
| Run | Build authority beyond your site | Earn mentions on forums and public platforms; engage in relevant discussions; publish helpful content others can reference; build an indexable presence | Brand, Community, Founders, Product Marketing |
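
LLM referral tracking from the Crawl phase can start small. Here is a sketch that labels a visit by its referrer URL. The hostname list is an assumption based on commonly seen referrers, so check it against your own analytics, and `classify_referrer` is a hypothetical helper, not part of any analytics product.

```python
from collections import Counter
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hostnames for AI chat products; verify and extend for your data.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_referrer(referrer_url: str) -> Optional[str]:
    """Return the AI product a referrer URL belongs to, or None if it is not one."""
    host = (urlparse(referrer_url).hostname or "").lower()
    for domain, label in AI_REFERRERS.items():
        # Match the domain itself and any subdomain such as www.perplexity.ai.
        if host == domain or host.endswith("." + domain):
            return label
    return None


def summarize(referrer_urls: list[str]) -> Counter:
    """Count visits per AI product, ignoring referrers that are not AI tools."""
    labels = (classify_referrer(url) for url in referrer_urls)
    return Counter(label for label in labels if label is not None)
```

Many visits arrive with no referrer at all, so read these counts as a floor rather than a full picture.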

Crawl: Start with monitoring

The first step is to understand how your ideal customers are using AI chat, and what kind of answers they’re getting.

Start by thinking about your users – real people, with jobs to get done. What pain points are they solving? What tools are they comparing? What workflows or questions might bring them to a tool like yours?

Awareness Prompts – User is problem-aware but not brand-aware

- Best tool for X – Best CRM for startups, best analytics tool for marketing teams

- Competitor alternatives – Alternatives to Salesforce, tools like Asana

- Competitor + use case – Alternative to Webflow that supports Git-based workflows

- Use case-specific – Tool for onboarding remote employees, manage OKRs across departments

- Stack or integration fit – CMS that works with React, PM tool that integrates with GitHub

- Feature or compliance-based – Platform with audit logs and SSO, HIPAA-compliant email software

- Migration prompts – What should I migrate to from Jira, how to move off HubSpot

Evaluation Prompts – User knows your brand and is researching it

- Is [Tool] good for startups?

- What are the downsides of [Tool]?

- [Tool] vs [Competitor]

- What does [Tool] integrate with?

- Is [Tool] HIPAA-compliant? SOC 2?

- How does [Tool] compare to others in [category]?

- Pricing for [Tool]

- What does [Tool] do?
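
Once you have the patterns, you can expand them into a concrete prompt list to monitor. A rough sketch in Python follows. The templates mirror a few of the patterns above, while the `expand` helper and every product, competitor, and audience name are placeholders you would swap for your own.

```python
from itertools import product
from string import Formatter

AWARENESS_TEMPLATES = [
    "Best {category} for {audience}",
    "Alternatives to {competitor}",
    "What should I migrate to from {competitor}",
]

EVALUATION_TEMPLATES = [
    "Is {tool} good for startups?",
    "What are the downsides of {tool}?",
    "{tool} vs {competitor}",
    "Pricing for {tool}",
]


def expand(templates: list[str], values: dict[str, list[str]]) -> list[str]:
    """Fill every template with every combination of the values it references."""
    prompts = []
    for template in templates:
        # Collect the placeholder names used by this template, in order, without repeats.
        names = [name for _, name, _, _ in Formatter().parse(template) if name]
        fields = list(dict.fromkeys(names))
        for combo in product(*(values[name] for name in fields)):
            prompts.append(template.format(**dict(zip(fields, combo))))
    return prompts


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    values = {
        "tool": ["Acme"],
        "category": ["CRM"],
        "audience": ["startups", "agencies"],
        "competitor": ["Salesforce", "HubSpot"],
    }
    for prompt in expand(AWARENESS_TEMPLATES + EVALUATION_TEMPLATES, values):
        print(prompt)
```

Start with a short list you can review by hand. Phrasing changes the answers, so a few variants per pattern usually tell you more than hundreds of near-duplicates.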

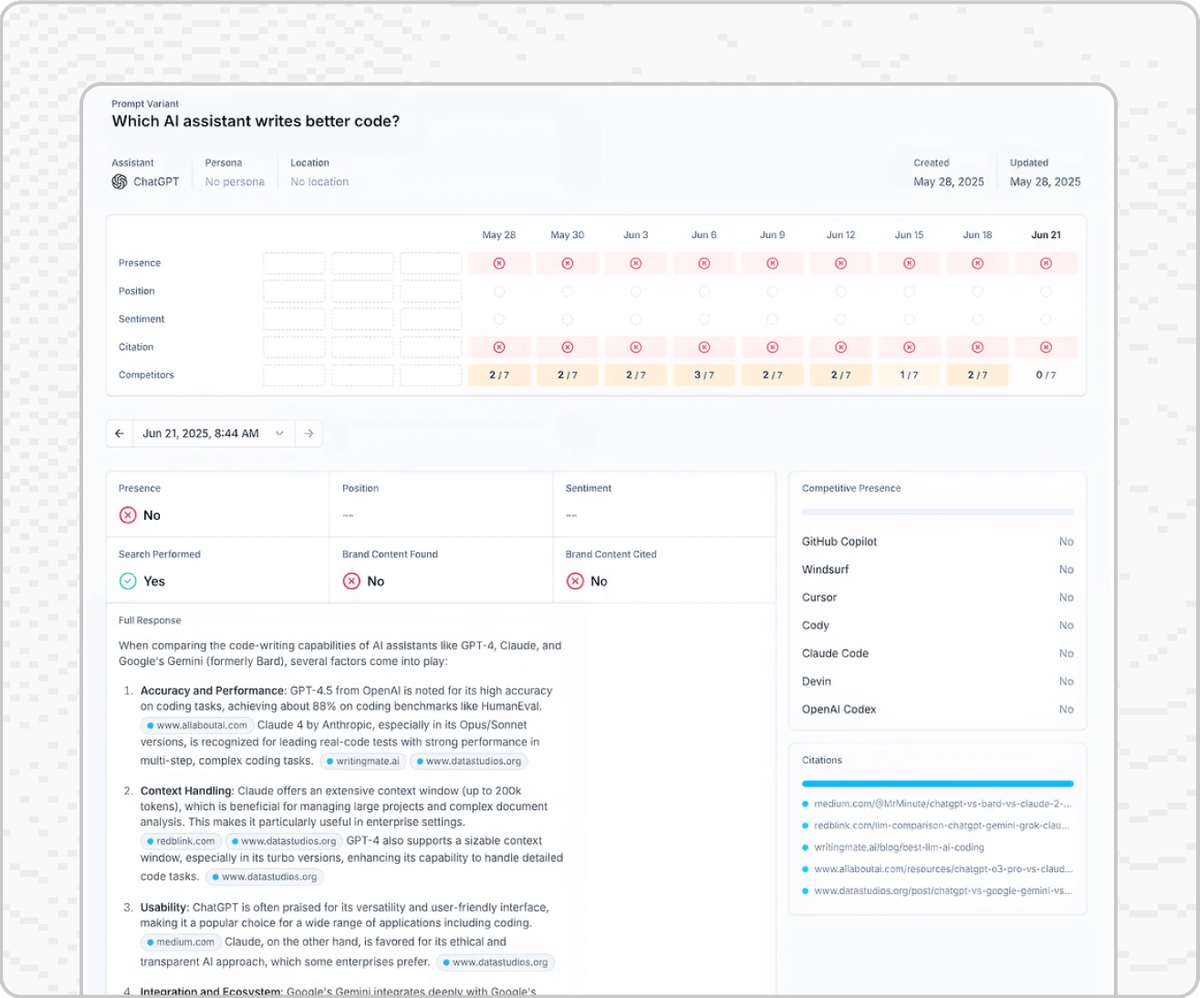

Pick the right tool

LLM platforms don’t share analytics, and referral traffic doesn’t tell you which prompt brought a user to your site. Current tracking tools also have major limitations:

- Many tools only track API responses, which often differ from in-app answers

- Deep, multi-step research flows aren’t captured

- Retrieval behavior (RAG) is opaque and constantly evolving

- Prompt volumes are unknown

- Answers vary by phrasing, user, and timing; there’s no single “result”

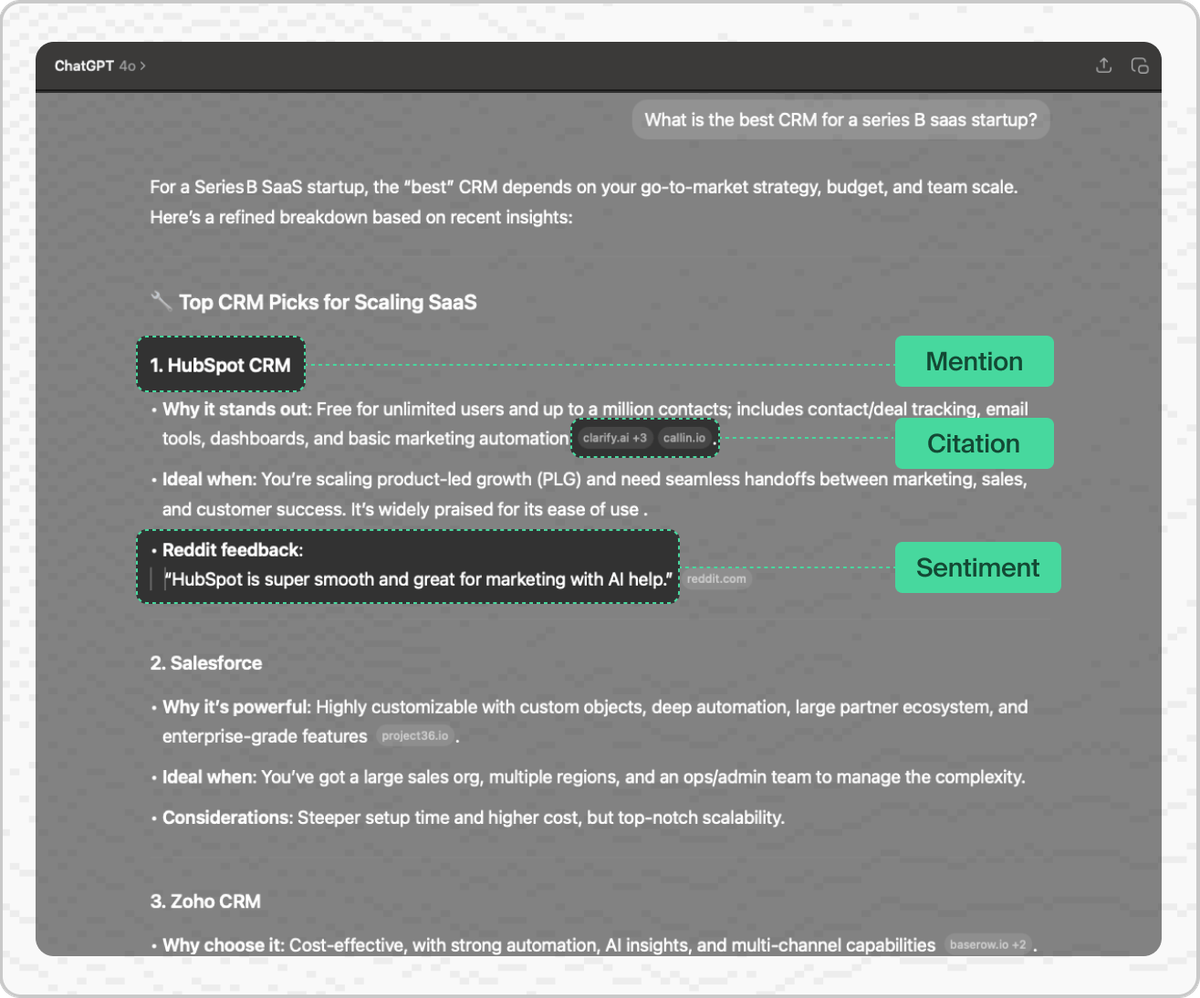

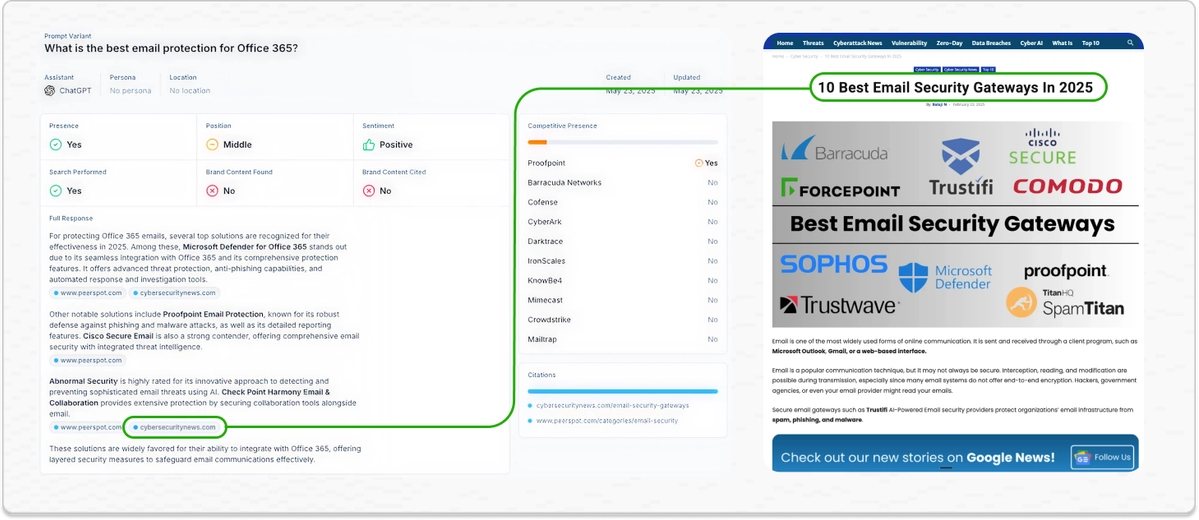

You’ll need a monitoring tool like Scrunch (see our chat with the founder) or one of the many other tools popping up in the space. These tools help monitor:

- Which prompts are relevant to your category or product

- Whether your brand is being mentioned in responses

- How your product is being described (tone, accuracy, sentiment)

- Which URLs are being cited, if any

- How competitors are positioned across the same prompts
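
If you want to sanity-check a tool's numbers, or you are starting with answers collected by hand, the core metrics are simple to compute. Here is a sketch that assumes you already have the answers and their cited URLs saved. The `Response` record and both functions are hypothetical, and how you capture answers is up to your tooling.

```python
import re
from collections import Counter
from dataclasses import dataclass, field
from urllib.parse import urlparse


@dataclass
class Response:
    """One captured AI answer for one prompt."""
    prompt: str
    answer: str
    cited_urls: list[str] = field(default_factory=list)


def mention_rate(responses: list[Response], brand: str) -> float:
    """Share of answers that mention the brand as a whole word, ignoring case."""
    if not responses:
        return 0.0
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = sum(1 for response in responses if pattern.search(response.answer))
    return hits / len(responses)


def cited_domains(responses: list[Response]) -> Counter:
    """Count how often each hostname appears among the cited URLs."""
    return Counter(
        urlparse(url).hostname
        for response in responses
        for url in response.cited_urls
    )
```

Run the same functions with each competitor's name to compare positioning across the same prompts. Tone and accuracy still need a human read.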

Walk: Optimize existing content

Once you understand the prompts that might be guiding users towards (or away from) your product, start making your own content easier for LLMs to discover and cite in their answers.

Start with the website content you already control: your homepage, product pages, landing pages, help docs, blog, and integrations pages. These are the most consistent and visible inputs into how AI models learn about and describe your product.

To be citation-worthy, your content needs to be clear, well-structured, and accessible to both humans and AI agents. The goal isn’t just to inform users, but to make it easy for LLMs to extract relevant sections and use them as reliable sources.

- Use direct, claims-based language to explain what your product does, who it’s for, and what problems it solves

- Structure content semantically (H1 → H2 → H3), with short paragraphs, bullets, and standalone sections

- Tie your product repeatedly to specific use cases, tasks, and audiences

- Keep language consistent across pages—use the same phrasing for product names, categories, and benefits

- Make key content technically accessible (avoid JS-only rendering, modals, or hidden tabs)

- Update stale pages regularly and show timestamps or changelogs

- Include alt text and structured data where appropriate to improve extractability
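
Some of these checks are easy to automate. As one example, here is a sketch that audits heading structure using only Python's standard library. The two rules it applies (exactly one H1, no skipped levels) are common conventions rather than requirements from any AI vendor, and `heading_issues` is a hypothetical helper.

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Record the level of every h1-h6 tag in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag names, so "H2" arrives here as "h2".
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Return a list of human-readable problems with the heading outline."""
    parser = HeadingCollector()
    parser.feed(html)
    levels = parser.levels
    issues = []
    h1_count = levels.count(1)
    if h1_count != 1:
        issues.append(f"expected exactly one h1, found {h1_count}")
    # Compare each heading with the one before it to catch skipped levels.
    for previous, current in zip(levels, levels[1:]):
        if current > previous + 1:
            issues.append(f"h{previous} is followed by h{current}, skipping a level")
    return issues
```

Run it on the raw HTML your server returns, not the DOM after JavaScript runs, since a crawler that does not execute JavaScript only sees the former.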

The majority of citations still come from traditional web content. Start by identifying pages that match high-value prompts but aren’t getting cited, and improve structure, phrasing, and clarity to close the gap.

Run: Create content for citation

Use prompt monitoring to guide the creation of new content.

Focus on building pages that map to high-value questions your users ask—and that LLMs are likely to cite.

- Target specific prompts with clear headers (e.g., “Best X for Y,” “How to migrate from Z”)

- Structure pages with summaries, lists, and modular sections that can stand alone

- Lead with clear, verifiable claims; avoid hedging or vague generalities

- Keep content fresh and visibly updated

- Use structured formats (FAQs, tables, how-tos) and semantic HTML

- Reinforce authority with author bylines, organization schema, and strong framing
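
Bylines and freshness can also be stated in machine-readable form. Here is a minimal sketch that builds schema.org Article markup with an author, a publisher, and a modified date. The `article_jsonld` helper and all sample values are hypothetical, while the property names (`headline`, `author`, `publisher`, `datePublished`, `dateModified`) are from the schema.org vocabulary.

```python
import json
from datetime import date


def article_jsonld(
    headline: str,
    author_name: str,
    organization_name: str,
    published: date,
    modified: date,
) -> str:
    """Build a schema.org Article JSON-LD string with byline and freshness dates."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": organization_name},
        # ISO 8601 dates, e.g. 2025-01-15.
        "datePublished": published.isoformat(),
        "dateModified": modified.isoformat(),
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    print(article_jsonld(
        "How to migrate from Jira",
        "Jane Doe",
        "Acme",
        date(2024, 6, 1),
        date(2025, 1, 15),
    ))
```

Update `dateModified` only when the content actually changes, and show the same date on the page.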

Good citation content is easy to extract, easy to trust, and easy to reuse. Write for that.

Build a distributed presence

Eventually, praising your own product won’t be enough. LLMs will only get more discerning – they’ll want to see that other voices in the community have gained value from the products they recommend.

Over time, you want to cultivate organic, earned product mentions outside of your own content. At some level, this is just good marketing – every marketer wants to spread knowledge and positive sentiment about their product to the right people, at the right time.

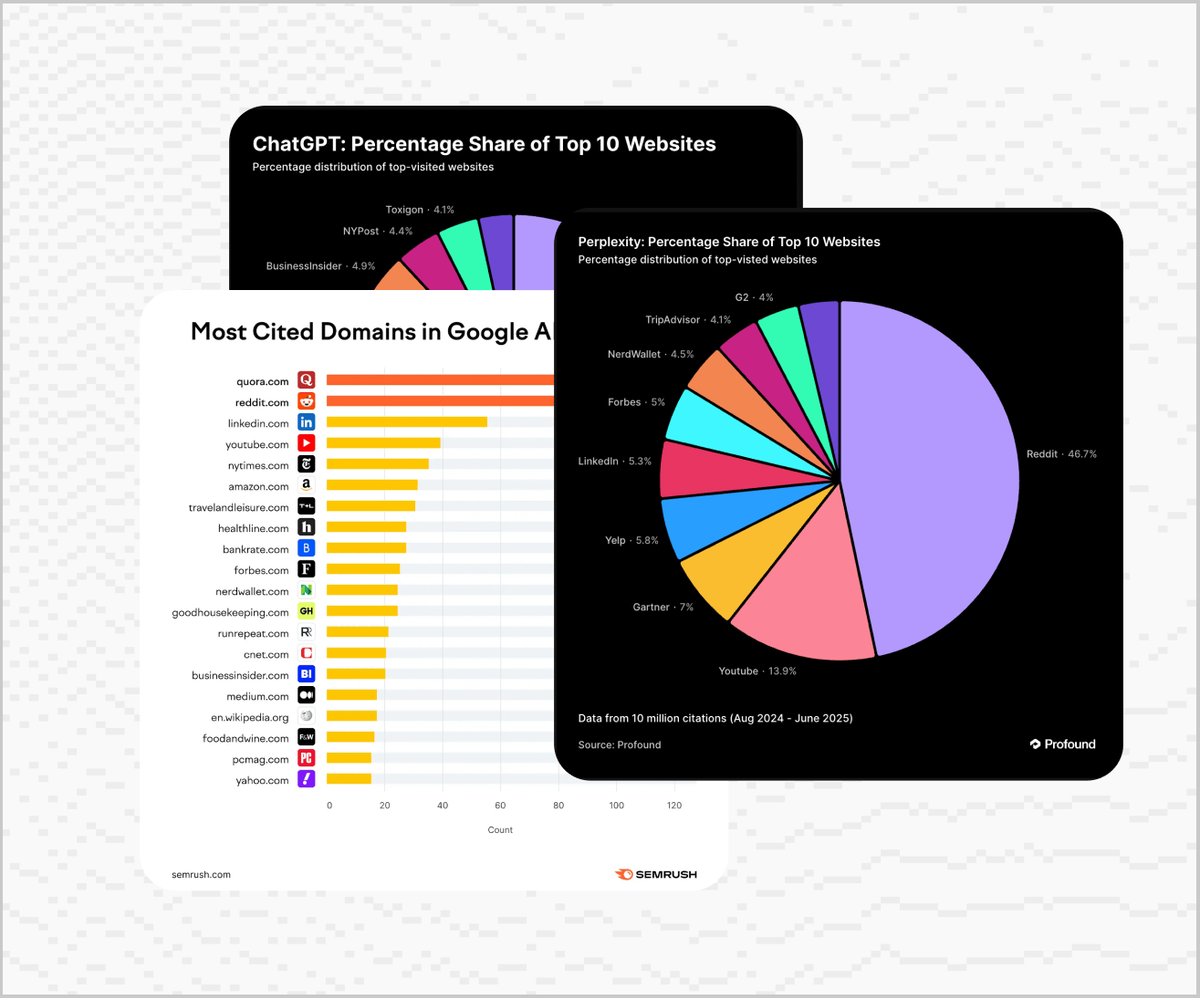

For many prompts and especially Google's AI Overviews, the most cited domains are public, open, community-driven platforms. Reddit and Quora top the list. GitHub, LinkedIn, and YouTube aren’t far behind.

This is a long game: think of it as a new era of link building, but far more genuine and less spammy.

What you can do tomorrow:

- Publish valuable content that others will reference—teardowns, comparisons, how-tos

- Monitor mentions in Reddit threads, Quora answers, GitHub discussions, or Slack communities

- Engage with questions in your space using language consistent with your own site

- Build indexable profiles on public platforms that LLMs scrape

Don’t just post links. Contribute practical context, then earn the mention.

Next up, we’ll go deep on prompts

We’ve barely scratched the surface of GEO. Every part of this workflow—from prompt tracking to content structure to external mentions—deserves its own deep dive. We’ll cover each in detail in the next installments.

Up next: prompts. How to find them, track them, and figure out which ones actually matter for your product.

Subscribe to get future guides in your inbox. And if you want more context now, check out our podcast with Chris Andrew, CEO of Scrunch.